And No One’s Asking Them to Slow Down

Once upon a time—roughly five years ago—AI models were rare, exotic creatures. You knew them by name. GPT-2. BERT. ResNet. You could count the important ones without taking your shoes off.

Fast-forward to today and… well… we have a situation.

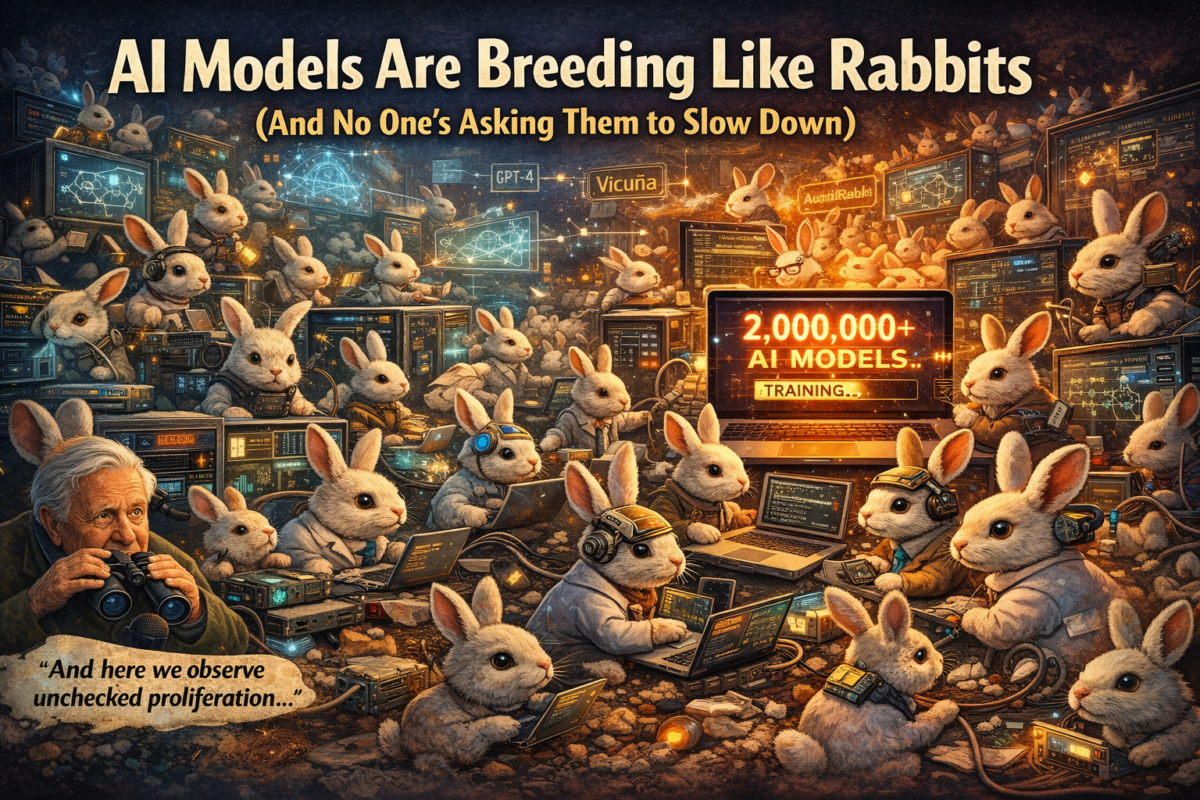

AI models are breeding like rabbits.

Open-weight rabbits. Fine-tuned rabbits. Domain-specific rabbits. Rabbits trained to train other rabbits.

If this were a nature documentary, David Attenborough would lower his voice and say:

“And here we observe the unchecked proliferation phase.”

He’d be right.

From Zoo Exhibit to Infestation

Five years ago:

- Thousands of models, but only a few anyone had heard of
- Mostly academic
- Mostly general-purpose
- Mostly trained by a small handful of institutions with real money and real GPUs

Today:

- ~2 million public models
- Millions more private
- Most trained on top of other models
- Many created by individuals, startups, or bored engineers with a credit card and a deadline

The ecosystem didn’t just grow.

It fractaled.

Every base model spawns:

- Instruction-tuned variants
- Role-specific variants
- “This model but better at one weird thing” variants

It’s less evolution, more mitosis.

The Dirty Secret: Models Training Models

Here’s the part everyone whispers about but rarely puts in bold text:

Most new models are not trained from raw human data anymore.

They’re trained on:

- Synthetic data
- Distilled outputs from larger models
- Ensembles of other models arguing with each other

AI is officially self-referential.

This is not necessarily bad—but it is irreversible.

We’ve crossed the line where:

- Humans define goals
- Models generate data
- Other models learn from that data
- Humans supervise after the fact

That’s not AI “assisting” humans.

That’s humans auditing an ecosystem.
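For the curious, here is roughly what "distilled outputs from larger models" means in practice. A common technique is knowledge distillation. The small student model is trained to match the big teacher model's softened probability distribution, not just its top answer.

This is a minimal pure-Python sketch with a few assumptions. It works on the logits for a single example, and the function names are made up for illustration rather than taken from any particular library.

```python
import math
from typing import Sequence

# Illustrative sketch: function names are hypothetical, not from any library.


def softmax(logits: Sequence[float], temperature: float = 1.0) -> list[float]:
    """Temperature-scaled softmax; a higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: Sequence[float],
                      teacher_logits: Sequence[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) on temperature-softened distributions, scaled by T**2."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(p * (math.log(p) - math.log(q))
             for p, q in zip(p_teacher, p_student) if p > 0)
    return kl * temperature ** 2


print(distillation_loss([1.0, 2.0, 3.0], [1.0, 2.0, 3.0]))      # 0.0
print(distillation_loss([3.0, 2.0, 1.0], [1.0, 2.0, 3.0]) > 0)  # True
```

Real training runs usually blend this term with an ordinary loss on ground-truth labels and compute it over batches in a tensor framework. The T² factor is a common convention that keeps the gradient scale roughly stable as the temperature changes.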

Satire Moment (Because If We Don’t Laugh…)

Somewhere right now:

- A model is summarizing another model’s explanation of a third model’s reasoning
- Which was trained to explain a fourth model
- That no human ever read end-to-end

Congratulations.

We have invented recursive intelligence bureaucracy.

The future won’t be ruled by a single superintelligence.

It’ll be ruled by thousands of specialized AIs holding meetings about it.

Serious Prediction: The Next 5 Years (2030)

1. Foundation Models Will Plateau

- Fewer truly new frontier models
- Marginal gains get more expensive
- Compute becomes the bottleneck, not ideas

The arms race slows at the top—but explodes below it.

2. Domain Models Will Explode

Expect millions more models, but:

- Trained for very specific tasks
- Law, medicine, finance, insurance, logistics
- Each one smaller, cheaper, faster

Think less “general intelligence,” more digital specialists that never sleep.

3. Model Orchestration Becomes the Product

The real value won’t be the model—it’ll be:

- Routing
- Validation
- Governance
- Memory
- Cost control

The winners won’t say “we built a model.”

They’ll say “we manage 400 of them without chaos.”
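What does "managing 400 of them" look like at its very smallest? Below is a toy router that covers only routing and cost control. It picks the cheapest model that has the right skill, clears a quality bar, and fits the budget.

Everything in it is an assumption for illustration: the registry, the model names, the prices and the scores are all invented. A real system would also need the validation, governance and memory pieces.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ModelSpec:
    """One entry in a hypothetical model registry (all values illustrative)."""
    name: str
    skills: frozenset[str]      # tasks this model is approved for
    cost_per_1k_tokens: float   # made-up price, any currency
    quality: float              # offline eval score in [0, 1]


def route(task: str, min_quality: float, budget_per_1k: float,
          registry: list[ModelSpec]) -> Optional[ModelSpec]:
    """Return the cheapest eligible model, or None so the caller can
    escalate, queue, or refuse instead of silently overspending."""
    eligible = [
        m for m in registry
        if task in m.skills
        and m.quality >= min_quality
        and m.cost_per_1k_tokens <= budget_per_1k
    ]
    if not eligible:
        return None
    # Cheapest first; on a price tie, prefer the higher-quality model.
    return min(eligible, key=lambda m: (m.cost_per_1k_tokens, -m.quality))


REGISTRY = [
    ModelSpec("general-large", frozenset({"summarize", "legal", "code"}), 10.0, 0.92),
    ModelSpec("legal-small", frozenset({"legal"}), 0.5, 0.88),
    ModelSpec("summarizer-tiny", frozenset({"summarize"}), 0.1, 0.75),
]

print(route("legal", 0.85, 5.0, REGISTRY).name)       # legal-small
print(route("summarize", 0.80, 5.0, REGISTRY))        # None
print(route("summarize", 0.80, 20.0, REGISTRY).name)  # general-large
```

Returning `None` instead of falling back to the priciest model is a deliberate choice. It pushes the "should we spend more?" decision up to whoever owns the budget.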

Serious Prediction: The Next 10 Years (2035)

1. Models Will Breed Autonomously (With Guardrails)

- Systems that detect gaps
- Generate synthetic training data
- Train task-specific sub-models automatically
- Retire underperforming ones

Not sentient.

Not conscious.

But self-maintaining ecosystems.
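The "detect gaps" and "retire underperforming ones" steps are the easiest parts of that loop to sketch. This example assumes you already have per-task evaluation scores for every model; the fleet, the model names and the numbers are hypothetical.

- **Gap:** a task where even the best model falls short of a threshold.
- **Retirement candidate:** a model that some other model Pareto-dominates. The other model covers all of its tasks, is never worse on any of them, and is better on at least one.

Generating synthetic data and training new sub-models are left out here. They would be the consumers of the gap list.

```python
# Hypothetical per-task eval scores (0 to 1) for each model in a fleet.
Scores = dict[str, dict[str, float]]


def find_gaps(scores: Scores, threshold: float) -> list[str]:
    """Tasks where even the best-scoring model stays below the threshold."""
    tasks = {task for per_model in scores.values() for task in per_model}
    best = {
        task: max(s[task] for s in scores.values() if task in s)
        for task in tasks
    }
    return sorted(task for task, score in best.items() if score < threshold)


def dominates(a: dict[str, float], b: dict[str, float]) -> bool:
    """True if model a covers every task b covers, is never worse, and is better somewhere."""
    if not all(task in a for task in b):
        return False
    return all(a[t] >= b[t] for t in b) and any(a[t] > b[t] for t in b)


def find_retirees(scores: Scores) -> list[str]:
    """Models that some other model Pareto-dominates on the tasks they cover."""
    return sorted(
        name
        for name, mine in scores.items()
        if any(dominates(theirs, mine)
               for other, theirs in scores.items() if other != name)
    )


FLEET: Scores = {
    "base": {"legal": 0.70, "medical": 0.60, "finance": 0.65},
    "legal-v1": {"legal": 0.80},
    "legal-v2": {"legal": 0.86},
}

print(find_gaps(FLEET, threshold=0.75))  # ['finance', 'medical']
print(find_retirees(FLEET))              # ['legal-v1']
```

Note that the weak generalist `base` survives, because no specialist covers all of its tasks. That is the "guardrails" part in miniature: retire only what is clearly redundant.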

2. “Training” Becomes Continuous

No more “version 4.1 released.”

Instead:

- Models adapt incrementally
- Training never fully stops
- Drift detection becomes critical

Static models will feel like dial-up internet.
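Drift detection is what keeps never-ending training from quietly going off the rails. One widely used, simple check is the Population Stability Index (PSI). It compares how a feature or model score was distributed at training time with what the model is seeing in production.

This sketch makes a few assumptions:

- Values fall between fixed bin edges, and anything outside them is ignored.
- The sample data is invented.
- The 0.2 alert threshold is a commonly quoted rule of thumb, not a standard.

```python
import math
from typing import Sequence


def histogram(values: Sequence[float], edges: Sequence[float]) -> list[float]:
    """Fraction of values in each bin [edges[i], edges[i+1]).
    The last bin also includes edges[-1]; values outside the edges are ignored."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        for i in range(len(counts)):
            is_last = i == len(counts) - 1
            if edges[i] <= v < edges[i + 1] or (is_last and v == edges[-1]):
                counts[i] += 1
                break
    n = sum(counts) or 1  # avoid dividing by zero when nothing landed in range
    return [c / n for c in counts]


def psi(expected: Sequence[float], actual: Sequence[float], eps: float = 1e-6) -> float:
    """Population Stability Index: sum over bins of (a - e) * ln(a / e)."""
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # empty bins would otherwise hit log(0)
        total += (a - e) * math.log(a / e)
    return total


def drifted(reference: Sequence[float], live: Sequence[float],
            edges: Sequence[float], threshold: float = 0.2) -> bool:
    """Flag drift when PSI between training-time and live data exceeds threshold."""
    return psi(histogram(reference, edges), histogram(live, edges)) > threshold


EDGES = [0.0, 0.25, 0.5, 0.75, 1.0]
reference = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]  # spread evenly
live = [0.8, 0.85, 0.9, 0.95, 0.6, 0.7, 0.8, 0.9]     # piled into the top bins

print(drifted(reference, reference, EDGES))  # False
print(drifted(reference, live, EDGES))       # True
```

In a continuously trained system, a check like this would typically gate the next update or trigger a human review rather than act on its own.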

3. Human Data Becomes Sacred

Ironically:

- Raw human-created data becomes more valuable
- Clean, verified human knowledge becomes premium fuel
- Synthetic data dominates volume—but not authority

Humans stop being the workforce.

They become the reference standard.

The Real Takeaway

This isn’t an AI apocalypse.

It’s an AI ecology.

The danger isn’t that models are breeding like rabbits.

It’s pretending they’re still rare zoo animals.

They’re not.

They’re infrastructure now.

And like any fast-breeding species:

- Ignore them, and they overrun the system
- Study them, and they become indispensable
- Manage them well, and they work for you

The future doesn’t belong to the biggest model.

It belongs to whoever knows which rabbit to deploy—and when to put it back in the cage.